Mom's House VR

September 2018 - December 2018

Click here to download

Project Website

OVERVIEW

Mom's House VR is a virtual reality video game for the Oculus Rift, made at Simon Fraser University. It was conceived and designed by a team of four: myself, Bob Wang, Jimmy Tse and Carmen Li. The game runs on Microsoft Windows and uses the Oculus Rift platform for in-game interaction. Given my prior experience with Unity, I took on both a leadership and a programming role on the team.

Particles were used to highlight interactable objects.

MY ROLE

User Experience

My tasks included implementing user interactions such as picking up and throwing objects and moving around the scene. Most of this required modifying the pre-existing scripts that come with the Oculus Integration asset. I also implemented visual cues and audio feedback to reduce confusion in the overall experience. Examples include a metallic trash can sound when garbage is dropped in the recycling bin, and particles around objects that users are meant to throw away.

The user is rewarded with green particles when they successfully throw garbage into the bin.

CHALLENGES

Out of Range Grabbable Objects

Several challenges came up while implementing user interactions in our game. The gameplay of Mom’s House requires the user to pick up many pieces of trash and cans in order to succeed, so picking things up must be intuitive enough that users do not get frustrated. After researching different interaction types, we decided on an object snapping interaction model so that users wouldn't need to bend down to pick up objects off the floor.

Object snapping makes it easier for users to grab objects just out of reach.
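
The core idea of object snapping can be sketched in a few lines. This is only an illustration in Python, not the Unity script used in the game: the `Grabbable` class, the `find_snap_target` function and the 1.5 m snap radius are all hypothetical names and values chosen for the example. It picks the nearest grabbable object within a fixed radius of the hand, which is the object that would then snap into the user's grip.

```python
import math
from dataclasses import dataclass


@dataclass
class Grabbable:
    """Hypothetical stand-in for a grabbable object in the scene."""
    name: str
    position: tuple  # (x, y, z) in metres


def find_snap_target(hand_position, grabbables, snap_radius=1.5):
    """Return the nearest grabbable within snap_radius of the hand, or None.

    snap_radius is an assumed value, not one taken from the project.
    """
    best, best_distance = None, snap_radius
    for item in grabbables:
        distance = math.dist(hand_position, item.position)
        if distance <= best_distance:
            best, best_distance = item, distance
    return best


# A hand at standing height, a can on the floor nearby, a bottle across the room.
hand = (0.0, 1.0, 0.0)
scene = [
    Grabbable("can", (0.0, 0.0, 0.5)),
    Grabbable("bottle", (3.0, 0.0, 0.0)),
]
target = find_snap_target(hand, scene)
print(target.name if target else "nothing in range")
```

Here the can on the floor is within the snap radius of the hand, so it is selected without the user having to bend down, while the bottle across the room is ignored.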

Conflicting Orientation of Camera Rig and Virtual Hands

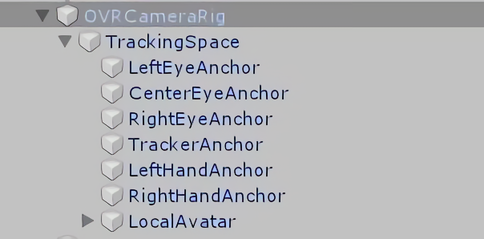

We also had an issue with the user’s hands not following the orientation of the head mounted display. Originally, we had created the hands as a separate object whose position was set to match the user’s position. While users could grab objects that were in front of them, if a user turned to look at an object, they couldn’t pick it up because the hands did not rotate with them. We fixed this by creating a parent Avatar (which held the hands, body colliders and held objects) and making it a child of OVRCameraRig. Essentially, we created a container that always follows the head mounted display in both position and rotation.

The LocalAvatar GameObject had to be a child object of OVRCameraRig.
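
To see why parenting fixes the problem, it helps to look at how a child's world position is derived from its parent. The sketch below is a simplified Python illustration, not Unity code: `child_world_position` is a hypothetical helper, and it only handles rotation about the vertical axis (yaw), using Unity's convention that a positive yaw turns from forward (+z) towards the right (+x). A hand held half a metre in front of the rig ends up in front of the user whichever way the rig faces.

```python
import math


def child_world_position(parent_position, parent_yaw_degrees, local_offset):
    """World position of a child, given its parent's position and yaw.

    Hypothetical helper for illustration; only rotation about the
    vertical (y) axis is considered.
    """
    yaw = math.radians(parent_yaw_degrees)
    x, y, z = local_offset
    # Rotate the local offset about the y (up) axis, then translate by the parent.
    world_x = parent_position[0] + x * math.cos(yaw) + z * math.sin(yaw)
    world_y = parent_position[1] + y
    world_z = parent_position[2] - x * math.sin(yaw) + z * math.cos(yaw)
    return (world_x, world_y, world_z)


rig_position = (0.0, 1.0, 0.0)
hand_offset = (0.0, 0.0, 0.5)  # half a metre in front of the rig

# Hand as a child of the rig: it follows both position and rotation.
facing_forward = child_world_position(rig_position, 0.0, hand_offset)
facing_right = child_world_position(rig_position, 90.0, hand_offset)

# Hand as a separate object that only copies position: rotation is ignored,
# so it stays in the same place when the user turns.
position_only = child_world_position(rig_position, 0.0, hand_offset)

print(facing_forward, facing_right, position_only)
```

When the rig turns 90 degrees, the parented hand swings round to the user's new forward direction, while the position-only hand stays where it was. That mismatch is what made objects impossible to grab after turning.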